Introduction

Artificial Intelligence (AI) has become the engine behind today’s digital transformation. From powering recommendation systems and self-driving cars to enabling real-time fraud detection in banking, the need for faster and more efficient computation is higher than ever. However, traditional processors such as CPUs and even GPUs, though powerful, often struggle to keep pace with the demands of large-scale inference tasks. That’s where specialized architectures come into play.

Among the most promising developments in this space is Groq, a company that has redefined how AI workloads are executed. Instead of following the conventional approach of complex instruction sets or parallel threads, this system introduces a radically simplified yet powerful architecture designed for deterministic performance. Its Linear Processing Unit (LPU) changes how developers think about inference, throughput, and latency.

This article will walk you through its mechanism, features, and ecosystem, compare it with competitors, explore its real-world applications, and explain why it is regarded as one of the strongest innovations in AI acceleration.

What Exactly is Groq?

GROQ is a California-based technology company founded by Jonathan Ross, who was also one of the brains behind Google’s Tensor Processing Unit (TPU). Ross and his team envisioned a computing architecture that could handle large-scale AI inference at extreme speed with predictability — something GPUs and TPUs often cannot guarantee due to their reliance on thread scheduling and resource sharing.

The outcome was the Linear Processor Unit (LPU), which simplifies computation by removing scheduling overhead. Instead of managing multiple threads or competing instructions, the LPU executes instructions in a straight pipeline, ensuring that each calculation happens at a guaranteed speed and order. This deterministic approach has massive implications for industries like healthcare, autonomous vehicles, and finance, where microseconds can determine accuracy, safety, and even profitability.

How the Mechanism Works (Explained Simply)

At first glance, many people confuse Groq’s system with GPUs because both deal with high-performance parallel workloads. But the underlying design is very different.

- Traditional GPUs: Work with thousands of small cores, executing threads in parallel. While this offers high throughput, it comes with complexity — instructions are not always predictable, memory access can vary, and performance depends on thread scheduling.

- Linear Processing Unit: Instead of multiple unpredictable threads, the LPU runs a deterministic pipeline. Each step flows into the next without delay. It’s like comparing a crowded multi-lane highway (GPU) with a high-speed bullet train on a dedicated track (LPU).

The benefits include:

- Predictability – Developers know exactly how long a computation will take.

- Low Latency – Real-time workloads like voice recognition or fraud detection run with minimal lag.

- Efficiency – Less wasted energy compared to GPUs constantly juggling threads.

- Simplified Programming – Because there’s no complex scheduling, coding for the LPU is more straightforward.

In simple terms, Groq has created a system where AI computations are not just faster but also guaranteed in timing, a feature that makes it stand out for mission-critical applications.

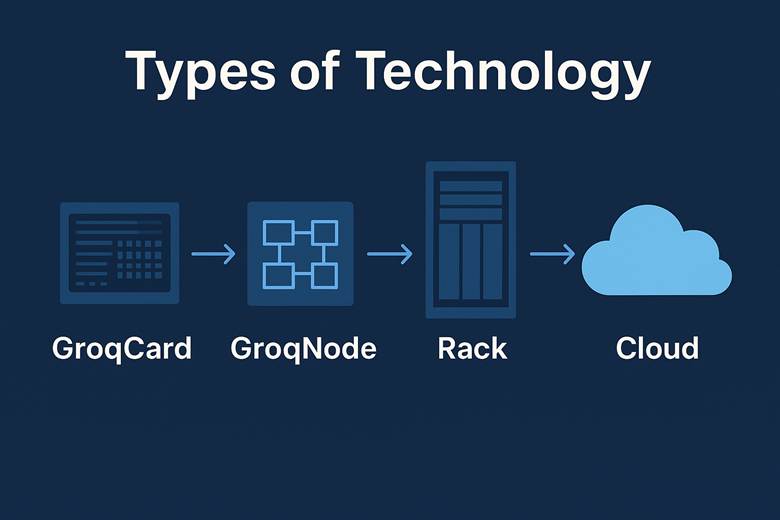

Features and Types of the Technology

Groq’s system is more than one chip; it is built up as a scalable system that scales from one card up a full rack and then into the cloud. That makes it available for use by any size of company based on their requirements.

1. GROQ CARD

One accelerator card that can slot right into prevailing servers with minimal setup. It is perfect for companies considering AI inference or conducting mid-scale jobs. It has a budget-friendly entry point and allows organizations to begin using AI acceleration without redoing their infrastructure.

2. GROQ NODE

A more powerful unit that combines multiple GroqCards into one dedicated system. This is built for organizations needing consistent high-performance inference. Think of it as a standalone AI engine—perfect for heavy workloads without slowing down the rest of your servers.

3. GROQ RACK

A rack-level scale that can handle many nodes and scale up to a full data center solution. It is designed for enterprises and government institutions and research centers handling vast data (even petabytes). It is all about stability and scalability and delivering data-centre level power within a single integrated system.

4. GROQ CLOUD

Cloud infrastructure where developers are able to run workloads with neither physical infrastructure purchased nor maintained. It puts state-of-the-art acceleration within greater reach of startups, students, and researchers. You can now test, try, and scale AI models at low cost from the cloud using GroqCloud.

5.GROQ WARE

The unifying software layer. GroqWare is the software tools, docs, and engineering support that makes it easy to develop, optimize and deploy AI workloads on Groq silicon. It ensures the user experience is always smooth and development cycles fast and efficient.

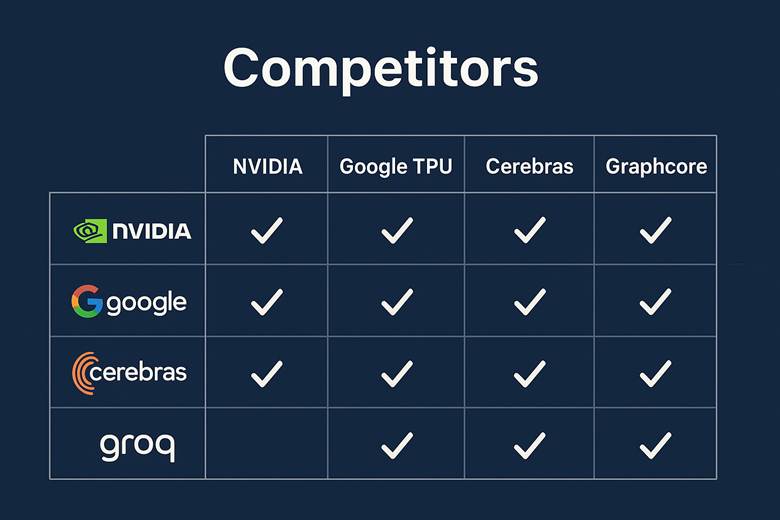

Competitors in the Market

The AI hardware acceleration market is highly competitive. To understand Groq’s place, let’s compare it with some key players:

NVIDIA GPUs – Currently dominate AI training and inference. Extremely versatile but, often face issues with high latency in real-time inference.

- Google TPUs – Specialized for deep learning but heavily tied to Google Cloud infrastructure, limiting flexibility for outside adoption.

- Cerebra’s Systems – Known for its wafer-scale engine (the largest chip in the world). Incredible raw power, but very expensive and not as predictable in timing.

- Graphcore – Provides Intelligence Processing Units (IPUs) with focus on parallelism. Strong contender but lacks the same deterministic guarantees.

Groq, by comparison, may not always offer the absolute highest raw throughput, but it delivers consistency, predictability, and low latency — qualities that competitors often struggle with.

Why Groq Stands Out

The strength of this architecture lies in how it balances simplicity and performance. Here’s why it is gaining attention:

- Deterministic Speed – Developers know exactly how workloads will perform, which is crucial for real-time environments.

- Energy Efficiency – Less wasted power compared to GPUs’ constant context switching.

- Scalability – From a single GroqCard to full racks or even cloud access, it scales easily.

- Ease of Use – The development environment is simplified, reducing learning curves for engineers.

- Industry Focus – Especially effective in finance (real-time trading), healthcare (diagnostics), autonomous vehicles (decision-making), and natural language processing.

In many ways, this approach challenges the “more cores = more power” philosophy, proving that smart design can rival brute force.

Real-World Applications

The true measure of any technology is not just theoretical speed but practical impact. Here are examples of how Groq’s system is being applied:

- Healthcare: Hospitals running diagnostic AI models (like tumor detection from scans) can achieve faster and more reliable results, helping doctors act in real time.

- Finance: High-frequency trading systems rely on predictable millisecond-level responses. LPU’s deterministic latency provides an edge in market performance.

- Autonomous Vehicles: Self-driving cars require instant decision-making. Even a 50-millisecond delay can cause accidents; Groq’s low-latency architecture reduces this risk.

- Large Language Models: When serving LLMs in production, inference often becomes a bottleneck. LPUs allow faster responses, even with large parameter models.

- Defense and Aerospace: Mission-critical systems where reliability is more important than raw power benefit greatly from deterministic computation.

Future of AI Hardware and Inference

AI acceleration is entering what many call the Inference Wars. Training models is one thing, but deploying them at scale, cost-effectively, and reliably is the true challenge. Here’s how things are shaping up:

- Shift from Training to Inference – The industry is realizing that training is rare, but inference happens billions of times daily. Efficiency here matters most.

- Edge AI – Devices from drones to medical scanners need local, low-latency inference. LPUs fit well in this ecosystem.

- Sustainability – Energy efficiency is becoming critical. Architectures like Groq’s may lead the way to greener AI.

- Hybrid Deployments – Many companies will mix GPUs (for training) with LPUs (for inference).

Over the next decade, expect massive competition among NVIDIA, Google, Cerebras, Graphcore, and Groq. But its unique deterministic advantage may help it carve a long-term niche.

Frequently Asked Questions (FAQs)

1. Is Groq only useful for large enterprises?

No. Thanks to GroqCloud, even startups and researchers can access the technology without needing to invest in expensive hardware.

2. How is it different from GPUs?

GPUs rely on thread scheduling and parallel cores, while LPUs use a simplified pipeline, giving more predictable performance.

3. Does it support all AI models?

It supports most modern AI models, especially those focused on inference. Training is still more efficient on GPUs or TPUs.

4. Is it expensive?

While enterprise hardware can be costly, the cloud platform offers cost-effective access.

5. Can it replace GPUs entirely?

Not immediately. GPUs remain essential for training, but LPUs may dominate real-time inference in the future.

Why Groq is a Bet for the Future

It markets itself as a forward-thinking solution by emphasizing simplicity, scalability, and speed. As opposed to the complex architecture-based traditional GPUs, Groq’s Linear Processing Units (LPUs) are built to offer predictable performance with ultra-low latency. Its ecosystem—that spans the GroqCard up to the GroqCloud—is flexible enough both for startups and enterprises so that it can scale with the growing need for AI workloads. This emphasis on accessibility and efficiency makes this a future-proof and trusted alternative within the fast-changing AI environment.

Conclusion

The rise of Groq represents a significant milestone in AI hardware evolution. It challenges the traditional assumption that raw parallelism is the only way to scale performance. By embracing simplicity, determinism, and scalability, it delivers value where it matters most: real-world deployment of AI models.

From healthcare and finance to autonomous systems and large-scale natural language processing, the technology is already proving itself as a next-generation inference accelerator. While competition in the space is fierce, Groq’s unique approach ensures that it is not just another chip maker, but a genuine disruptor in the AI revolution.

As the demand for AI-powered solutions grows exponentially, systems that guarantee predictable, efficient, and scalable performance will define the future and has positioned itself right at the center of that transformation.